In Praise of the Static Map

You need to make a map.

And you want to use the web.

If you’re a geospatial professional it’s likely you misapprehend the task in 3 crucial ways:

- You will underestimate the time it will take to create an interactive map using one of the usual JavaScript mapping APIs.

- You will overestimate the amount of time your audience will spend using your map.

- You will overestimate your audience’s enthusiasm for “interacting” with your map.

If time is money, then my money-making advice to you is…embrace the Static Map.

The Browser is Your IDE

If the phrase “Integrated Development Environment” doesn’t ring any bells, then Static Maps are a great place to start web mapping. Most of the well-known services (Google Maps, MapQuest, Bing, Mapbox, et al.) have Static Map APIs that are driven entirely by URL strings entered into your browser. You simply specify parameters for height, width, zoom level, the coordinates of your point/polygon/line overlay, color, etc., etc.

So, for instance, let’s draw a polygon around Walter White’s house in Albuquerque, New Mexico.

This is created simply using these URL parameters:

http://open.mapquestapi.com/staticmap/v4/getmap?
size=300,250
&type=sat
&zoom=17
&key=<your key here>
&center=35.126,-106.5366
&polygon=
color:0xff0000|
width:2|
fill:0x44ff0000|
35.126018,-106.53676,
35.126012,-106.53636,
35.126207,-106.53636,
35.126213,-106.53676,
35.126018,-106.53676
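Because the whole map is just a URL, it can also be assembled in code. Below is a minimal Python sketch, assuming the MapQuest v4 parameter names exactly as written above (the endpoint is copied from this post and may no longer respond). The helper names `polygon_param` and `static_map_url` are hypothetical, and `YOUR_KEY` stands in for a real API key.

```python
from urllib.parse import urlencode

# Endpoint copied from the example above; it may have been retired since.
BASE_URL = "http://open.mapquestapi.com/staticmap/v4/getmap"


def polygon_param(coords, color="0xff0000", width=2, fill="0x44ff0000"):
    """Build the polygon value: style options, then a flat lat,lng list."""
    # Close the ring by repeating the first vertex, as the hand-written URL does.
    if coords[0] != coords[-1]:
        coords = list(coords) + [coords[0]]
    flat = ",".join(f"{lat},{lng}" for lat, lng in coords)
    return f"color:{color}|width:{width}|fill:{fill}|{flat}"


def static_map_url(center, coords, key, size=(300, 250), zoom=17, map_type="sat"):
    """Return a static-map URL with one polygon overlay (hypothetical helper)."""
    params = {
        "size": f"{size[0]},{size[1]}",
        "type": map_type,
        "zoom": zoom,
        "key": key,
        "center": f"{center[0]},{center[1]}",
        "polygon": polygon_param(coords),
    }
    # Leave , | and : unescaped so the result reads like the hand-written URL.
    return BASE_URL + "?" + urlencode(params, safe=",|:")


if __name__ == "__main__":
    house = [
        (35.126018, -106.53676),
        (35.126012, -106.53636),
        (35.126207, -106.53636),
        (35.126213, -106.53676),
    ]
    print(static_map_url((35.126, -106.5366), house, key="YOUR_KEY"))
```

The dictionary preserves insertion order, so the parameters come out in the same order as the URL above, which makes the two easy to compare side by side.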

Fairly straightforward, no? And since fundamentally what you’re doing is string manipulation, the door is open for you to become the danger by making URLs in bulk using some Excel CONCATENATE fu.

You are the Web Mapper Who Knocks.
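For readers who would rather script it than CONCATENATE it, here is a rough Python equivalent of the bulk approach: one URL per spreadsheet row. It is a sketch under stated assumptions. The CSV columns `id`, `lat` and `lng` are hypothetical, only the `size`, `type`, `zoom`, `key` and `center` parameters from the example URL are used, and `urls_from_csv` is an illustrative name, not part of any API.

```python
import csv
import io
from urllib.parse import urlencode

# Same endpoint as the example URL in this post; it may no longer be live.
BASE_URL = "http://open.mapquestapi.com/staticmap/v4/getmap"


def urls_from_csv(csv_file, key, size="300,250", zoom=17, map_type="sat"):
    """Yield (row id, static-map URL) for every row of a CSV file.

    Assumes hypothetical columns named id, lat and lng.
    """
    for row in csv.DictReader(csv_file):
        params = {
            "size": size,
            "type": map_type,
            "zoom": zoom,
            "key": key,
            "center": f"{row['lat']},{row['lng']}",
        }
        yield row["id"], BASE_URL + "?" + urlencode(params, safe=",")


if __name__ == "__main__":
    # Hypothetical sample rows standing in for an exported spreadsheet.
    sample = io.StringIO("id,lat,lng\nsite-a,35.126,-106.5366\nsite-b,35.2,-106.6\n")
    for site_id, url in urls_from_csv(sample, key="YOUR_KEY"):
        print(site_id, url)
```

Overlays such as the polygon parameter shown earlier could be added to `params` the same way; the point is that each row becomes one self-contained map request.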

Saving Time Means Getting Real

Many of my clients need maps that their intended audience will look at for about 8 seconds. Sorry, but 8 seconds of viewing time means it’s not worth my time to cook up pretty D3 visualizations or fancy interactive controls.

Besides, they don’t know what those controls mean. Or how they work on their phone.

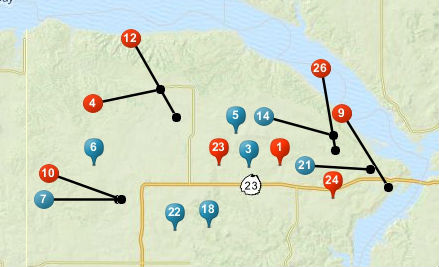

So here’s a swatch of oil well drilling activity in North Dakota. Note that the MapQuest static map API automatically de-clutters the points for me. In real life this means I run dozens of these maps every night and I don’t have to QA/QC them. More REM sleep is a win for everyone.
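A nightly run like this boils down to “fetch each URL, save the image.” The Python sketch below shows that loop under a few assumptions: the jobs are `(name, url)` pairs you have already built, the `.png` suffix is a guess (check the service’s docs or the response’s Content-Type for the real format), and `render_batch` and `fetch_bytes` are hypothetical names. Scheduling it nightly (cron, Task Scheduler, etc.) is left out.

```python
import pathlib
import tempfile
import urllib.request


def fetch_bytes(url, timeout=30):
    """Download one rendered map image and return its raw bytes."""
    with urllib.request.urlopen(url, timeout=timeout) as resp:
        return resp.read()


def render_batch(jobs, out_dir, fetch=fetch_bytes, suffix=".png"):
    """Save each (name, url) job as out_dir/<name><suffix>; return the paths.

    `suffix` is an assumption about the image format. `fetch` is injectable
    so the loop can be exercised without network access or an API key.
    """
    out = pathlib.Path(out_dir)
    out.mkdir(parents=True, exist_ok=True)
    written = []
    for name, url in jobs:
        path = out / f"{name}{suffix}"
        path.write_bytes(fetch(url))
        written.append(path)
    return written


if __name__ == "__main__":
    # Dry run with a fake fetcher and hypothetical job names.
    jobs = [("well-001", "http://example.com/a"), ("well-002", "http://example.com/b")]
    with tempfile.TemporaryDirectory() as tmp:
        for p in render_batch(jobs, tmp, fetch=lambda url: b"fake image"):
            print(p.name, p.stat().st_size)
```

Passing the fetcher in as a parameter keeps the network call in one place, which makes it easy to add retries or rate limiting later without touching the loop.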

82.3% of Interactive Maps Are Used To Make Screen Captures*

(*invented statistic)

Another unpleasant truth that web mapmakers dare not admit to themselves is that a depressingly large percentage of people use web maps to manually create screen captures to insert into a PDF or PowerPoint.

So let’s do them a solid and save them from MS Paint by giving them the static maps they really want.

If we have some basic coding chops we can make pretty PDFs (who doesn’t love a PDF?).

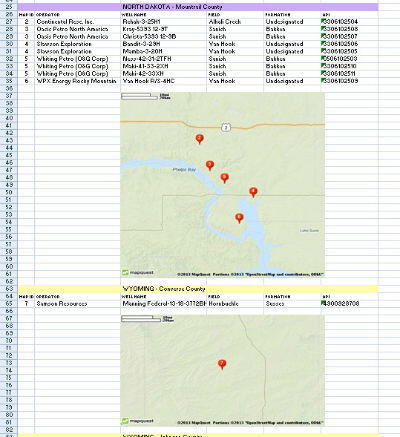

But with a little scripting elbow-grease we can also go to 11 by embedding maps inside Excel spreadsheets:

In this use case, the attribute data is as important as the geography: simply by scrolling down in Excel, users can absorb and mentally filter on multiple variables, all while sipping contentedly on their morning coffee.
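The post doesn’t show the embedding script. One lighter-weight alternative, and not necessarily what the author used, is the `IMAGE()` worksheet function available in newer Microsoft 365 builds of Excel, which renders a picture from a URL inside a cell. The Python sketch below writes a CSV whose last column holds an `=IMAGE("...")` formula next to each row’s attributes. Assumptions: the column names and the helper `rows_with_image_formulas` are hypothetical, and as far as I know `IMAGE()` expects an https image URL, so an http endpoint like the one above may not render.

```python
import csv
import io


def rows_with_image_formulas(rows, url_key="map_url"):
    """Return CSV text: each row's attributes plus a 'map' column holding
    an Excel IMAGE() formula that points at that row's static map URL."""
    if not rows:
        return ""
    fields = [k for k in rows[0] if k != url_key] + ["map"]
    buf = io.StringIO()
    writer = csv.DictWriter(buf, fieldnames=fields, lineterminator="\n")
    writer.writeheader()
    for row in rows:
        out = {k: v for k, v in row.items() if k != url_key}
        # Inside an Excel string literal, a double quote is escaped by doubling it.
        url = row[url_key].replace('"', '""')
        out["map"] = f'=IMAGE("{url}")'
        # The csv module quotes this field and doubles its quotes for the CSV layer.
        writer.writerow(out)
    return buf.getvalue()


if __name__ == "__main__":
    # Hypothetical attribute rows; the URL is a placeholder, not a real map.
    demo = [{"site": "A", "status": "active", "map_url": "https://example.com/a.png"}]
    print(rows_with_image_formulas(demo))
```

Keeping the attributes in ordinary columns preserves what makes the spreadsheet useful here: people can still sort and filter on them while the map sits in the same row.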

When I suggested on Twitter that embedding static maps inside Excel workbooks represented cutting-edge Geo Business Intelligence, I received this glowing reply:

Hands-Free Time-Series Cartography

Because hating animated GIFs is hating life itself.

(Mean center of US population 1790-2020)

—Brian Timoney

Marc Pfister created a Google Maps Static API playground to help you get started.